Are Optimising Compilers "Detrimental in Typescript"?

Optimising compilers (like Clang/LLVM or GCC) have developed amazingly sophisticated methods for rewriting code that is optimised for readability into code that is optimised for performance. These are standard tools in the compiled-language space, but they are rarely used on the web. In this article, we discuss whether it’s worth revisiting optimising compilers for the web, and what performance benefits may remain untapped.

Introduction

Recently, the React team open-sourced the React Compiler. This compiler optimises your React code, and the team at Meta have seen impressive results when applying it to production codebases such as Instagram. This got me thinking: why is this the first optimising compiler I’ve heard of in the JS ecosystem since the Closure Compiler? Sure, we have minifiers in abundance and type strippers galore, but remarkably few optimising compilers.

To answer this question, I googled “optimising compilers JavaScript”. After all, maybe this was just a blind spot of mine. I found a few interesting results, such as an attempt to use LLVM on JS code; Prepack, which focuses mostly on detecting and pre-calculating compile-time constants; and, of course, the Closure Compiler. I also found some Reddit posts…

A Review of Reddit

There were some interesting takes on optimising compilers for JavaScript/TypeScript on Reddit… Takes like:

Because JavaScript is a high-level language that is highly optimized by the runtime’s JIT compiler, applying traditional compiler optimizations at the JavaScript source code level isn’t necessarily worth it. (source)

And also:

These things [function inlining, and loop unrolling] work in a traditional programming environment, but would be detrimental in many Typescript projects. (source)

Of course, neither factoid is substantiated with evidence. But the point about the JIT compiler does at least sound plausible.

So let’s test it!

Method

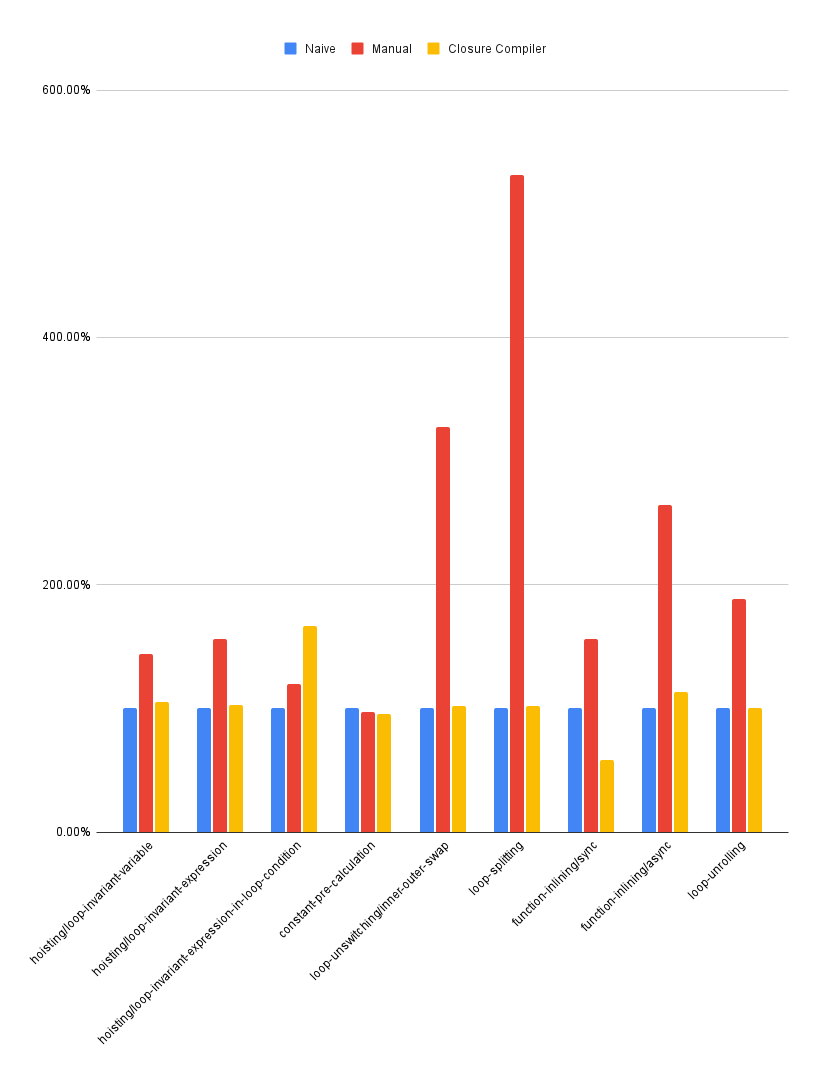

I put together a set of benchmarks for common optimising compiler techniques such as loop unrolling, function inlining, and several more. Each test case consisted of three benchmarks: the naive implementation, the same code with the optimisation applied manually, and the naive implementation optimised by the Closure Compiler.
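To make the naive and manual variants concrete, here is a hypothetical loop-unrolling pair. The article’s actual benchmark sources are not shown, so the function names and the unroll factor of 4 are assumptions; the unrolled version keeps the same order of additions, so it returns exactly the same result as the naive one.

```typescript
// Hypothetical loop-unrolling pair; not the article's benchmark source.

// Naive: one element per iteration, so one condition check and increment per element.
function sumNaive(values: number[]): number {
  let total = 0;
  for (let i = 0; i < values.length; i++) {
    total += values[i];
  }
  return total;
}

// Manually unrolled by a factor of 4 (an assumed factor), followed by a
// remainder loop for lengths that are not a multiple of 4.
function sumUnrolled(values: number[]): number {
  let total = 0;
  const n = values.length;
  const limit = n - (n % 4);
  let i = 0;
  for (; i < limit; i += 4) {
    // Additions stay in the same order as the naive loop, so floating-point
    // results are identical.
    total += values[i];
    total += values[i + 1];
    total += values[i + 2];
    total += values[i + 3];
  }
  for (; i < n; i++) {
    total += values[i];
  }
  return total;
}
```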

Each benchmark was repeated more than 16 million times per iteration, to give V8’s optimising compiler time to work its magic too. This way, we can test the claim that “optimizations at the JavaScript source code level [aren’t] necessarily worth it” because of the JIT compiler.
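The article does not include its benchmark harness, so the following is only a minimal sketch of how an iterations-per-second rate could be measured under the setup described above. The names `measureRate` and `RateResult` are hypothetical; the sink accumulates each result so that the JIT is less able to discard the benchmark body as dead code.

```typescript
// Hypothetical harness sketch, not the author's: each "iteration" calls the
// benchmark body `innerRuns` times, and the result is reported as iterations/s.
interface RateResult {
  rate: number; // iterations per second
  sink: number; // accumulated results, consumed so the work is harder to eliminate
}

function measureRate(body: () => number, innerRuns: number, iterations: number): RateResult {
  let sink = 0;
  const start = Date.now();
  for (let iter = 0; iter < iterations; iter++) {
    for (let run = 0; run < innerRuns; run++) {
      sink += body();
    }
  }
  // Clamp to 1 ms so very fast runs do not divide by zero.
  const elapsedSeconds = Math.max(Date.now() - start, 1) / 1000;
  return { rate: iterations / elapsedSeconds, sink };
}

// Usage at roughly the article's scale (someBenchmark is hypothetical):
// const { rate } = measureRate(() => someBenchmark(), 16_000_000, 10);
```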

Results

| Optimisation | Naive (iter/s) | Manual (iter/s) | Closure Compiler (iter/s) | Naive (normalised) | Manual (normalised) | Closure Compiler (normalised) |
|---|---|---|---|---|---|---|
| hoisting/loop-invariant-variable | 9.7 | 14 | 10.2 | 1.00 | 1.44 | 1.05 |
| hoisting/loop-invariant-expression | 10.2 | 15.9 | 10.5 | 1.00 | 1.56 | 1.03 |
| hoisting/loop-invariant-expression-in-loop-condition | 28.7 | 34.3 | 47.8 | 1.00 | 1.20 | 1.67 |
| constant-pre-calculation | 12.4 | 12 | 11.8 | 1.00 | 0.97 | 0.95 |
| loop-unswitching/inner-outer-swap | 46.2 | 151.3 | 47 | 1.00 | 3.27 | 1.02 |
| loop-splitting | 28.3 | 150.4 | 28.8 | 1.00 | 5.31 | 1.02 |
| function-inlining/sync | 54.2 | 84.7 | 31.6 | 1.00 | 1.56 | 0.58 |
| function-inlining/async | 4.5 | 11.9 | 5.1 | 1.00 | 2.64 | 1.13 |
| loop-unrolling | 22.4 | 42.2 | 22.5 | 1.00 | 1.88 | 1.00 |
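Loop splitting showed the largest manual gain in the table (roughly 5.3× the naive rate). Since the benchmark source isn’t reproduced here, the following is a hypothetical sketch of the textbook transformation: a loop whose branch depends only on the loop index is split at that index into two branch-free loops. Function names and the doubling rule are illustrative assumptions.

```typescript
// Hypothetical loop-splitting pair; not the article's benchmark source.

// Naive: the branch depends only on whether i is below `split`,
// yet it is evaluated on every iteration.
function weightedSumNaive(values: number[], split: number): number {
  let total = 0;
  for (let i = 0; i < values.length; i++) {
    if (i < split) {
      total += values[i] * 2;
    } else {
      total += values[i];
    }
  }
  return total;
}

// Split: the iteration space is divided at `split`, so neither loop body branches.
// The index is shared so the second loop resumes exactly where the first stopped.
function weightedSumSplit(values: number[], split: number): number {
  let total = 0;
  const boundary = Math.min(split, values.length);
  let i = 0;
  for (; i < boundary; i++) {
    total += values[i] * 2;
  }
  for (; i < values.length; i++) {
    total += values[i];
  }
  return total;
}
```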

Discussion

We can see from the results that, in most cases, the Closure Compiler produces minimal performance improvements, and it even causes significant performance degradation on one benchmark (synchronous function inlining, at 0.58× the naive rate). Although it does deliver a meaningful gain on one benchmark (1.67× for the loop-invariant expression in the loop condition), such benefits are unlikely to warrant complicating the build pipeline for most commercial projects, which may explain why the Closure Compiler is rarely seen in the wild.

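However, the manually applied optimisations produce significant performance improvements on all but one benchmark (constant pre-calculation, where the manual version is slightly slower). On three of the benchmarks (loop unswitching/inner-outer swap, loop splitting and asynchronous function inlining), the manually optimised code runs more than twice as fast as the naive version, and over five times as fast in the case of loop splitting.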
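Asynchronous function inlining is one of the benchmarks where the manual optimisation more than doubled throughput (roughly 2.6× in the table). The benchmark source is not included, so this is only a hypothetical sketch: a small async helper whose body contains no `await` is inlined as plain arithmetic, removing a promise allocation and a suspension per element. Note that this changes when the caller yields to the event loop, so an optimiser could only apply it where nothing depends on that interleaving.

```typescript
// Hypothetical async-inlining pair; not the article's benchmark source.

// A small async helper: each call allocates a promise, and the caller's
// `await` suspends until it settles.
async function doubleAsync(x: number): Promise<number> {
  return x * 2;
}

// Naive: awaits the helper once per element.
async function sumDoubledNaive(values: number[]): Promise<number> {
  let total = 0;
  for (const v of values) {
    total += await doubleAsync(v);
  }
  return total;
}

// Inlined: the helper's body is substituted directly, so the loop runs
// synchronously with no per-element promise or suspension.
async function sumDoubledInlined(values: number[]): Promise<number> {
  let total = 0;
  for (const v of values) {
    total += v * 2;
  }
  return total;
}
```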

The synchronous function inlining benchmark is a notable example, as it shows a rare case where an optimising compiler has a negative impact on performance. Interestingly, the fast hand-optimised code and the slow Closure Compiler output are identical in all but one respect: in addition to inlining the function, the Closure Compiler also swapped the operands of the comparison in the function body, converting i % 2 === 0 to 0 === i % 2.
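The following is a hypothetical reconstruction of the three variants described above. The article does not include the benchmark source, so the helper name and loop are assumptions; what it mirrors is the described difference, where the manual and Closure-style versions differ only in the order of the comparison operands.

```typescript
// Hypothetical reconstruction; not the article's benchmark source.

// Naive: a small helper called once per iteration.
function isEven(i: number): boolean {
  return i % 2 === 0;
}

function countEvensNaive(n: number): number {
  let count = 0;
  for (let i = 0; i < n; i++) {
    if (isEven(i)) count++;
  }
  return count;
}

// Manually inlined: the helper's body is substituted at the call site.
function countEvensInlined(n: number): number {
  let count = 0;
  for (let i = 0; i < n; i++) {
    if (i % 2 === 0) count++;
  }
  return count;
}

// Closure-Compiler-style: identical to the manual version except that the
// constant has been moved to the left-hand side of the comparison.
function countEvensOperandsSwapped(n: number): number {
  let count = 0;
  for (let i = 0; i < n; i++) {
    if (0 === i % 2) count++;
  }
  return count;
}
```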

It’s not clear why the Closure Compiler would apply such a transformation, nor why it would affect performance. Perhaps past versions of V8 evaluated strict equality comparisons more efficiently when the left-hand side was a constant. In my experience, it is far more common for people to write the constant on the right-hand side of the expression, so it’s possible that the V8 team decided to optimise for this case instead.

It should be noted that such isolated benchmarks are not representative of production applications, so we might observe different results on more realistic programs. We also only investigated a small subset of the optimisation techniques available to modern optimising compilers. However, given the magnitude of the improvements achieved by the manual optimisations, further research into JavaScript-to-JavaScript optimising compilers seems worthwhile. This should focus on testing these techniques against more complex codebases and exploring additional optimisation techniques.

Such research could be very impactful, as there are several possible applications for such compilers. One example is the front end, where frameworks like React have heavy workloads to render or hydrate the UI on page load. Since V8 only applies its optimising compiler to hot functions, the initial rendering or hydration of a page may suffer from executing slower, unoptimised bytecode. According to comments made by V8 developers, in some circumstances a function may need as many as 10k invocations before it is optimised.

Another case where compile-time optimisations may be useful is so-called serverless applications. It is increasingly common to deploy such applications to tiny virtual servers that are automatically scaled horizontally, up and down, according to demand. This practice results in a phenomenon known as “cold starts”, where a new instance of the application must start up to handle an increase in demand. In such cases, fast startup is essential, as requests may wait until the new instance is ready to handle them. On a MacBook Pro, application frameworks such as NestJS can add nearly 200ms to startup time compared with a raw Node.js script. The impact will likely be significantly higher on serverless instances, which may have as little as 128MB of memory and a single vCPU.

It may also be worth exploring optimisations that make use of type information. Much production code is written in TypeScript, and the TypeScript type checker can infer type information even from JavaScript files. It may therefore be possible to use type information to guide optimisations of JavaScript code that would not be possible on a lower-level representation. For instance, some asynchronous functions do not use the async keyword and instead return promises directly. The TypeScript type checker can detect these, because it can identify functions declared without async that return instances of the Promise class. Further research could be directed at identifying such type-driven optimisations.
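To illustrate why type information helps here, the sketch below shows that at the type level a function returning a Promise without the async keyword looks the same as an async function, whereas a purely syntactic search for async would only find the first. `ReturnsPromise` is a hypothetical helper type, not part of TypeScript’s standard library; a real optimiser would more likely query the type checker directly.

```typescript
// Illustrative sketch; ReturnsPromise and the load* functions are hypothetical.

// Declared with the async keyword:
async function loadWithAsync(): Promise<number> {
  return 42;
}

// No async keyword, but it still returns a Promise:
function loadWithoutAsync(): Promise<number> {
  return Promise.resolve(42);
}

// Resolves to `true` when F's return type is a Promise, `false` otherwise.
type ReturnsPromise<F extends (...args: any[]) => any> =
  ReturnType<F> extends Promise<unknown> ? true : false;

// Both declarations type-check, showing that the type system classifies the two
// functions alike regardless of whether the async keyword is present.
const asyncDetected: ReturnsPromise<typeof loadWithAsync> = true;
const plainDetected: ReturnsPromise<typeof loadWithoutAsync> = true;
```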

Conclusion

JavaScript has benefited substantially from performance improvements in V8 and other engines. However, this research suggests that substantial further performance improvements could be unlocked by an optimising JavaScript-to-JavaScript compiler. Such compilers could have widespread application in both backend and frontend code. Of particular interest are serverless JavaScript applications and client-rendered frontend applications, as these are the most likely to suffer from running code that has not yet been optimised by the JavaScript engine. Further research is required to identify a wider range of techniques that can be effectively applied to JavaScript code, and to assess the real-world impact of such optimisations on production applications.